Hello everybody,

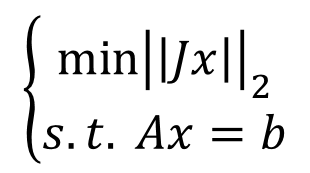

I am working with an optimisation problem as the following:

where J is a T x N matrix, x is a column vector of dimension N, A is an M x N matrix and b is a column vector of dimension M.

I am trying to solve it using CVX with the following code:

    cvx_begin
        variable x(NNodes)
        minimize( norm(J*x, 2) )
        subject to
            A*x == b;
    cvx_end

My question is about obtaining a reliable solution to this problem. In most cases, when I work with a problem like this, I have to normalise all the matrices by the Frobenius norm of one of them to get a good solution. But this does not always work: it even produces different results depending on which Frobenius norm I use, i.e. the solution obtained when normalising by the Frobenius norm of J differs from the one obtained when normalising by the Frobenius norm of A. In addition, in some cases it is not necessary to normalise any matrix at all.

I cannot understand why normalising by one matrix or the other produces different solutions. The problem is essentially the same regardless of the normalisation (as far as I understand). Can somebody help me with this issue?
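To spell out why I expect the scaling not to matter, here is a short sketch. The symbols $\alpha$ and $\beta$ are hypothetical positive scale factors (for example a Frobenius norm), and I am assuming that b is divided by the same factor as A:

$$
\left\| \tfrac{1}{\alpha} J x \right\|_2 = \tfrac{1}{\alpha} \left\| J x \right\|_2 ,
\qquad
\tfrac{1}{\beta} A x = \tfrac{1}{\beta} b \;\Longleftrightarrow\; A x = b ,
\qquad \alpha, \beta > 0
$$

$$
\operatorname*{arg\,min}_{x \,:\, \frac{1}{\beta} A x = \frac{1}{\beta} b} \left\| \tfrac{1}{\alpha} J x \right\|_2
\;=\;
\operatorname*{arg\,min}_{x \,:\, A x = b} \left\| J x \right\|_2
$$

So in exact arithmetic the feasible set is unchanged and the objective is only multiplied by a positive constant, which means the set of minimisers should be the same and only the optimal value changes by the factor $1/\alpha$. If the minimiser is not unique, two runs could still legitimately return different points from that set.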

Thanks,

Jose