Dear all,

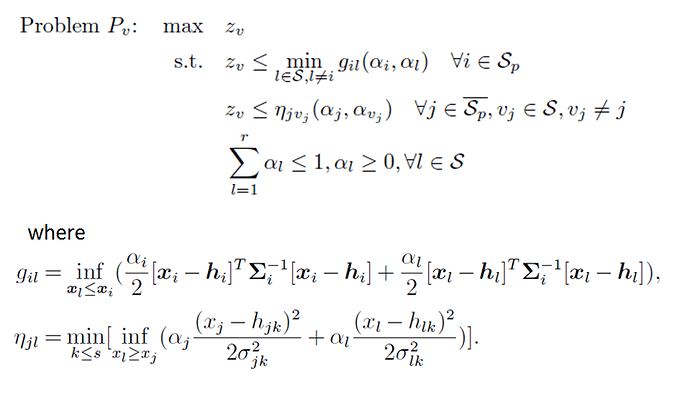

I’m trying to solve the nested/multi-level convex optimization problem shown in the image above.

The decision variables are z_v, alpha_1, …, alpha_r (with S = {1, 2, …, r}). The functions g_il and eta_jv_{j} are concave with respect to the corresponding alphas.

I defined incomplete specifications for the functions g_il and eta_jv_{j} as follows.

function cvx_optval = g_il(alpha_i,alpha_l,h_i,h_l,sigma_i_inv,sigma_l_inv)

[s,~] = size(h_i);

cvx_begin

variables x_i(s) x_l(s);

minimize(0.5*alpha_i*quad_form(x_i-h_i,sigma_i_inv) + 0.5*alpha_l*quad_form(x_l-h_l,sigma_l_inv));

subject to

x_l <= x_i;

cvx_end

function cvx_optval = min_g_il(alpha,i,h,sigma_inv)

[r,s]=size(h);

cvx_optval = 100000;

for l = 1:r

if l~=i

cvx_optval = min(cvx_optval,g_il(alpha(i),alpha(l),h(i,:)',h(l,:)',sigma_inv{i},sigma_inv{l}));

end

end

function cvx_optval = eta_jl(alpha_j,alpha_l,h_j,h_l,sigma_j,sigma_l)

[s,~]=size(h_j);

cvx_optval = 100000;

for k = 1:s

cvx_begin

variables x_j x_l;

minimize(0.5*alpha_j*(x_j-h_j(k))^2/sigma_j(k)^2 + 0.5*alpha_l*(x_l-h_l(k))^2/sigma_l(k)^2);

subject to

x_l >= x_j;

cvx_end

cvx_optval = min(cvx_optval, 0.5*alpha_j*(x_j-h_j(k))^2/sigma_j(k)^2 + 0.5*alpha_l*(x_l-h_l(k))^2/sigma_l(k)^2);

end
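
For reference, this is how I read the two helper functions above in math form. It is only a transcription of the code, under the assumptions that quad_form(x, P) means x^T P x and that the running min in eta_jl is meant to take the minimum over all coordinates k. The symbols \Sigma_i^{-1} (for sigma_i_inv), h_{jk} (for h_j(k)) and \sigma_{jk} (for sigma_j(k)) are just my notation for the code variables.

```latex
\begin{aligned}
g_{il}(\alpha_i,\alpha_l) &= \min_{x_i,\,x_l \in \mathbb{R}^s}\;
  \tfrac12\,\alpha_i\,(x_i-h_i)^{\top}\Sigma_i^{-1}(x_i-h_i)
  + \tfrac12\,\alpha_l\,(x_l-h_l)^{\top}\Sigma_l^{-1}(x_l-h_l)
  \quad \text{s.t. } x_l \le x_i, \\
\eta_{jl}(\alpha_j,\alpha_l) &= \min_{k=1,\dots,s}\;\min_{x_j \le x_l}\;
  \tfrac12\,\alpha_j\,\frac{(x_j-h_{jk})^2}{\sigma_{jk}^2}
  + \tfrac12\,\alpha_l\,\frac{(x_l-h_{lk})^2}{\sigma_{lk}^2}.
\end{aligned}
```

For fixed x, each objective is linear in the alphas, so each function is a pointwise minimum of linear functions of alpha. That is the reason I expect both to be concave in alpha.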

The outer optimization problem (problem P_v) is as follows:

function cvx_optval = sub_cvx_prob(h,sigma,sigma_inv,v,nonPareto)

[r,s] = size(h);

cvx_begin

variable z;

variable p(r) nonnegative;

maximize(z);

subject to

for i = 1:r

if not(ismember(i,nonPareto))

z <= min_g_il(p,i,h,sigma_inv);

else

l = v(find(i==nonPareto));

z <= eta_jl(p(i),p(l),h(i,:)',h(l,:)',sigma(i,:)',sigma(l,:)');

end

end

sum(p) <= 1;

cvx_end

When I substitute numerical values for alpha into the g_il function, it works well. But when the problem is nested, so that the alphas are entries of the outer variable p, CVX raises the error

"Cannot perform the operation: {real affine} .* {convex}"

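My guess at where the message comes from, written out for one term of the g_il objective (this is my own reading and I have not confirmed it; \Sigma_i^{-1} stands for sigma_i_inv): in the nested call alpha_i is no longer a number but an entry of the outer variable p, so the term becomes a product of an affine expression and a convex one.

```latex
\tfrac12\,
\underbrace{\alpha_i}_{\text{affine in the outer variable } p}
\cdot
\underbrace{(x_i-h_i)^{\top}\Sigma_i^{-1}(x_i-h_i)}_{\text{convex in the inner variable } x_i}
```

With a numerical alpha_i the first factor is a constant, which would explain why the standalone call works.
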
I found only a few posts related to nested/embedded/multi-level convex optimization, and the DCP ruleset is still a little tricky for me. Could anyone help me figure out this problem? Please let me know if you need any further information. Thanks in advance.

Best,

Weizhi