clc

clear

close all;

%% Initialization of parameters & channel setup

K=5;%Number of users

Nru=2;%Number of RUs

N_pkts=randi([1 5],K,1);

w=N_pkts;

Tsls=zeros(K,1);

global ap_ant;

ap_ant=8;

M=ap_ant;

Nrx=8;

Pt_dB=30;%uplink transmit power in dBm

Pt=10^(Pt_dB/10);

%Path-loss at d0

d0=1;%in meters

c=3e8;%in m/s

fc=5e9;%in Hz

lambda=c/fc;

PL0=(lambda/(4*pi*d0))^2;

PL0_dB=10*log10(PL0);

a=3.8;%pathloss exponent

dmax=20;%in meters

PL=zeros(1,K);

PL_dB=zeros(1,K);

d=zeros(1,K);

for k=1:K

d(k)=randi([2,dmax]);%user distance in meters

PL(k)=PL0*(d0/d(k))^a;

PL_dB(k)=10*log10(PL(k));%pathloss in dB

end

Kc=1.380649E-23;

T=290;%in kelvin

B=160e6;%in Hz (160 MHz)

N_sc=106;%Number of subcarriers in an RU

B_sc=78.125e3;%Subcarrier bandwidth

B_ru=N_sc*B_sc;

sigma2=Kc*T*B_ru;

sigma2dB=10*log10(sigma2*1e3);%noise power in dBm

%channel matrix

%Y=zeros(Nru,K,K);N=zeros(Nru,K,K);n=zeros(K,Nru);

G=zeros(Nru,K,K);

%Generation of channel matrix

H=zeros(Nru,Nrx,K);

for r=1:Nru

for k=1:K

H(r,:,k)=(sqrt((PL(k))/2)).*(randn(Nrx,1)+1i*randn(Nrx,1));

end

end

% H=abs(H);

%% cvx solver

cvx_begin

cvx_solver mosek

variable y(K,Nru);

C2=[];

C3=[];

for r=1:Nru

for k=1:K

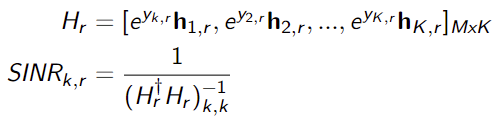

Hr=reshape(H(r,:,:),Nrx,K);

Xr=reshape(exp(y(1:K,r)),1,K);

XH=repmat(Xr,Nrx,1);

Heq=XH.*Hr;

Hi=real(inv(Heq'*Heq));

SINR(k,r)=1/(sigma2*(Hi(k,k)));

R(k,r)=log(1+SINR(k,r));

end

C3=[C3 sum(exp(y(k,:)))];

end

for r=1:Nru

C2=[C2;sum(exp(y(:,r)))];

end

maximize(sum(R(k,r)),"all");

subject to

exp(y(:,:))<= ones(K,Nru);

C2<=M.*ones(Nru,1);

C3<=1.*ones(1,K);

cvx_end

exp(y)
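
In equation form, the code above appears to encode the following problem. This is a sketch under stated assumptions: the symbols $x_{k,r}=e^{y_{k,r}}$ and $X_r=\mathrm{diag}(x_{1,r},\dots,x_{K,r})$ are notation introduced here (they are not names from the code), $H_r$ is the $N_{rx}\times K$ channel matrix of RU $r$ (`Hr`), the objective is assumed to be the sum over all users and RUs, and `C3` is assumed to hold one entry per user.

$$
\begin{aligned}
\max_{y}\quad & \sum_{r=1}^{N_{ru}}\sum_{k=1}^{K}\log\bigl(1+\mathrm{SINR}_{k,r}\bigr),
\qquad
\mathrm{SINR}_{k,r}=\frac{1}{\sigma^{2}\,\Bigl[\operatorname{Re}\Bigl\{\bigl((H_rX_r)^{H}(H_rX_r)\bigr)^{-1}\Bigr\}\Bigr]_{kk}}\\
\text{s.t.}\quad & e^{y_{k,r}}\le 1 \quad \forall k,r,\\
& \sum_{k=1}^{K} e^{y_{k,r}}\le M \quad \forall r,\\
& \sum_{r=1}^{N_{ru}} e^{y_{k,r}}\le 1 \quad \forall k.
\end{aligned}
$$

Here $H_rX_r$ corresponds to `Heq=XH.*Hr`, that is, column $k$ of $H_r$ scaled by $e^{y_{k,r}}$.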

I have verified that the objective function and the constraints are convex, but I get the error message below.

Error using .*

Disciplined convex programming error:

Cannot perform the operation: {log-affine} .* {complex affine}

Error in zf_cvx_resource_allocation (line 70)

Heq=XH.*Hr;

Could you please tell me whether this formulation is accepted in CVX?

If it is not an accepted format, can you please suggest another formulation to solve this problem?
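
One observation that may be relevant to a reformulation, offered as a sketch rather than a fix. Write $H_r$ for the $N_{rx}\times K$ channel matrix of RU $r$ and $X_r=\mathrm{diag}(e^{y_{1,r}},\dots,e^{y_{K,r}})$, so that `Heq` equals $H_rX_r$; these symbols are notation introduced here, not names from the code. Assuming $y$ is real (so the scaling is real and positive) and $H_r$ has full column rank (which needs $K\le N_{rx}$), the column scaling can be pulled out of the inverse:

$$
(H_rX_r)^{H}(H_rX_r)=X_r\,H_r^{H}H_r\,X_r
\quad\Longrightarrow\quad
\Bigl[\bigl((H_rX_r)^{H}(H_rX_r)\bigr)^{-1}\Bigr]_{kk}
= e^{-2y_{k,r}}\,\bigl[(H_r^{H}H_r)^{-1}\bigr]_{kk}.
$$

Under those assumptions,

$$
\mathrm{SINR}_{k,r}=\frac{e^{2y_{k,r}}}{\sigma^{2}\,\bigl[(H_r^{H}H_r)^{-1}\bigr]_{kk}},
$$

where the denominator depends only on numeric data and can be computed before `cvx_begin`. This avoids multiplying a log-affine expression by a complex matrix, although it does not by itself show that the resulting objective satisfies the DCP rules.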